We Fixed Massive Indexation Issues To Recover Organic Traffic

This case study demonstrates how we fixed massive indexation issues for a food-industry client and recovered its organic traffic.

B2C, Blog

1.5K+ Pages Indexed

< 4 months

Background

- Nature Of The Business

- Key Challenges

- Our Winning Strategy/Action Steps

- Results

- Time Frame

Nature Of The Business

Our client's site serves the food industry: it is a place where every foodie can discover recipes, cooks, videos, and how-tos based on the food they love. Helping create “kitchen wins” is what they’re all about.

Key Challenges

- The site was built on React JS, arguably one of the most popular choices for creating rich interactive web apps. But React sites raise a number of SEO-friendliness concerns when it comes to crawling, rendering, and indexing.

- Page deindexation was a significant issue. The site had more than 2K pages, but only a handful were in the Google index.

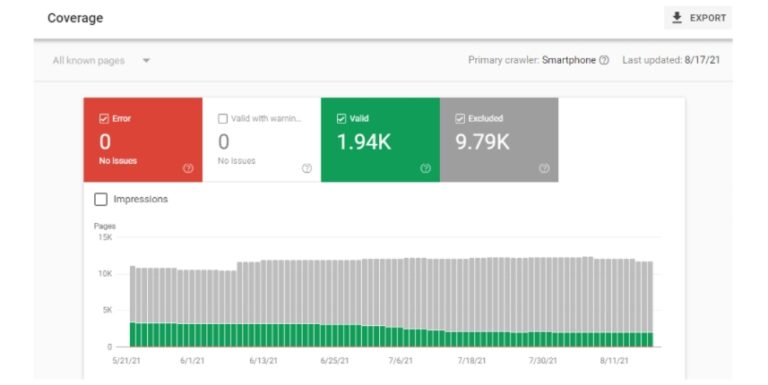

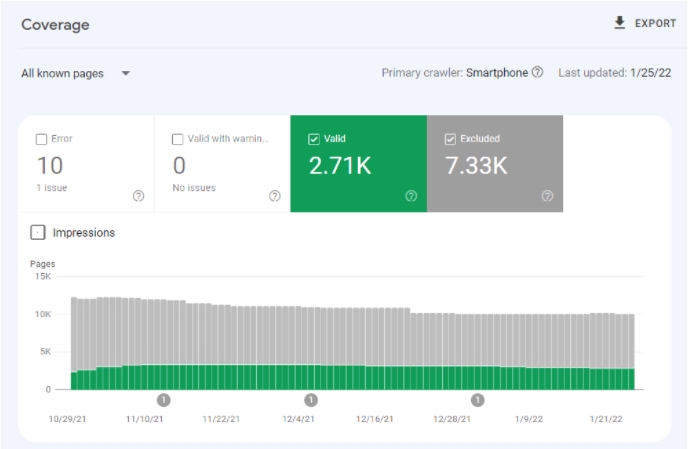

- The scary part was that the index coverage trends in Google Search Console (GSC) showed rapid deindexation of pages, which was hurting organic traffic. So the client approached us to help recover and grow that lost traffic.

Our Winning SEO Strategy / Action Steps

- Technical SEO Audit & Fix

- GSC Audit & Fix

- Index Coverage Trends & Crawl Errors

- Page with redirect

- Duplicate without user-selected canonical

- Not found (404)

- Crawled – currently not indexed

- Soft 404

- Alternate page with proper canonical tag

- Deindexed Staging Site & Pages

- Enforced HTTPS and set .htaccess to force redirects to the preferred domain

- Created/Optimized robots.txt

- Created/Optimized Sitemaps

- Assigned self-referencing canonical tags to each page

- Fixed Mobile Usability/Mobile Friendliness Issues

- Schema Markup Implementation

- Video Schema Markup

- Recipe Schema Markup

- Fixed Orphan Page Issues Using HTML Sitemap

Let’s dive in.

Our first and most crucial step was a thorough site audit to identify the causes of poor crawling, rendering, and indexing. We recommended fixing the critical issues first so that the site was technically healthy. It’s important to note that technical SEO audits are typically part of longer-term engagements.

We also performed a Google Search Console (GSC) audit, which combines crawling and indexing analysis. GSC contains several crucial reports that we used to identify and fix issues that could adversely affect organic search performance.

During the audit, we crawled the site multiple times and in multiple ways, using several crawling tools, each suited to a specific purpose. Because tools alone are not enough to diagnose technical issues, we also performed a thorough manual audit to identify the culprit.

Another critical issue was that staging pages were indexed in search results, which created massive content duplication. Since the staging site should not appear in Google Search, we recommended deindexing all of those pages.
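One common way to keep a staging host out of search results is to send an `X-Robots-Tag: noindex` response header on every staging response. The sketch below illustrates that idea only; it is not the exact change made on the client's site, and the hostname `staging.example.com` and the helper name `robots_headers` are hypothetical.

```python
# Hypothetical staging hostname; replace with the real staging host(s).
STAGING_HOSTS = {"staging.example.com"}


def robots_headers(host: str) -> dict:
    """Return extra response headers for the given Host header value.

    Staging hosts get a noindex directive so search engines drop their pages;
    production hosts get no extra header.
    """
    hostname = host.split(":")[0].lower()  # strip an optional port
    if hostname in STAGING_HOSTS:
        return {"X-Robots-Tag": "noindex, nofollow"}
    return {}


print(robots_headers("staging.example.com"))
print(robots_headers("www.example.com"))
```

Note that crawlers can only see a noindex directive on pages they are allowed to fetch, so the staging host should not be blocked in robots.txt while the pages are being dropped from the index.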

Likewise, we had to enforce HTTPS and use .htaccess to force redirects to the preferred domain. When we checked, multiple versions of the site (www, non-www, HTTP, and HTTPS) were all still browsable. We recommended that only one of these be accessible in a browser and that the others be 301 redirected to the canonical version.
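The redirect rule itself is simple to state. Here is a minimal Python sketch of the logic, assuming `https://www.example.com` is the preferred version (a placeholder, not the client's domain). The real fix was done in .htaccess; this only shows which requests should receive a 301 and where they should land.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Assumed preferred version of the site (placeholder domain).
CANONICAL_HOST = "www.example.com"
SITE_HOSTS = {"example.com", "www.example.com"}


def redirect_target(url: str) -> Optional[str]:
    """Return the URL to 301 redirect to, or None if no redirect is needed."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if host not in SITE_HOSTS:
        return None  # not one of our hostnames
    if parts.scheme == "https" and host == CANONICAL_HOST:
        return None  # already the canonical version
    # Keep path and query string, force https and the preferred host.
    return urlunsplit(("https", CANONICAL_HOST, parts.path or "/", parts.query, ""))


for variant in ("http://example.com/recipes", "https://example.com/recipes",
                "http://www.example.com/recipes", "https://www.example.com/recipes"):
    print(variant, "->", redirect_target(variant))
```

Every non-canonical variant maps straight to the final URL in a single hop, which avoids redirect chains.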

The next issue was a missing sitemap. We guided the client to create and optimize a sitemap that lists the website’s most important pages, so that search engines can find and crawl them.
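As an illustration, a sitemap in the standard sitemaps.org XML format can be generated with a few lines of Python. This is a sketch under assumptions: the URLs and dates are placeholders, and `build_sitemap` is a hypothetical helper, not the tooling used on the project.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build a sitemap XML string from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2021-09-15
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder pages for illustration only.
print(build_sitemap([
    ("https://www.example.com/", "2021-09-15"),
    ("https://www.example.com/recipes/pancakes", "2021-09-20"),
]))
```

The resulting file is typically served at the site root and referenced from robots.txt or submitted in GSC.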

Furthermore, there were many orphan pages with no internal links pointing to them, which kept them from being indexed. We guided the client to fix these orphan pages with an HTML sitemap, which helps search engines find and crawl them and even helps lost human visitors find a page.
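Finding orphan pages comes down to comparing the list of known pages with the set of pages that receive at least one internal link. A minimal sketch, assuming the page list and link map have already been exported from a crawl (the paths below are made up):

```python
def find_orphans(pages, links):
    """Return known pages that no other page links to.

    pages: set of known page paths (e.g. from the sitemap or CMS export)
    links: dict mapping a source page to the list of pages it links to
    """
    linked = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a self-link does not make a page discoverable
    }
    return sorted(page for page in pages if page not in linked)


# Hypothetical crawl data for illustration.
pages = {"/", "/recipes", "/recipes/pancakes", "/recipes/old-soup"}
links = {
    "/": ["/recipes"],
    "/recipes": ["/", "/recipes/pancakes"],
}
print(find_orphans(pages, links))  # ['/recipes/old-soup']
```

Listing every page returned by such a check on an HTML sitemap page gives each one at least one internal link.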

Moving on, our audit showed that none of the pages had a canonical tag. We guided the client to add a self-referencing canonical tag to each page. Self-referencing canonical tags let us specify which URL we want recognized as the preferred version. As recommended by Google, we used self-referencing canonicals as a technical SEO best practice.
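A self-referencing canonical is just a `<link rel="canonical">` element whose `href` is the page's own clean URL. The sketch below assumes that query strings and fragments (tracking parameters, anchors) are not part of a page's identity on this site; that is an assumption for illustration and would not hold for sites where query parameters select distinct content.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_tag(url: str) -> str:
    """Return a self-referencing canonical <link> tag for the given page URL."""
    parts = urlsplit(url)
    # Assumption: drop query string and fragment, lowercase the host.
    clean = urlunsplit((parts.scheme, parts.netloc.lower(), parts.path or "/", "", ""))
    return '<link rel="canonical" href="{}">'.format(escape(clean, quote=True))


# Placeholder URL for illustration.
print(canonical_tag("https://www.example.com/recipes/pancakes?utm_source=newsletter#top"))
# <link rel="canonical" href="https://www.example.com/recipes/pancakes">
```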

Likewise, we identified some mobile usability/mobile friendliness issues on mobile devices such as smartphones and tablets and guided the client to fix them as soon as possible. For example, we made sure that all pages used relative width and proportional position values for CSS elements. We also ensured that images could scale.

Furthermore, we created and optimized robots.txt, which tells crawlers what needs to be crawled. For example, it can allow web crawlers to crawl some pages and disallow others.
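Before deploying a robots.txt file, it is worth checking that its rules do what is intended. Python's standard `urllib.robotparser` can evaluate rules against sample URLs. The rules and URLs below are hypothetical, not the client's actual file, and Python's parser may differ from Googlebot in edge cases, so treat this as a quick sanity check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def is_crawlable(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if the given user agent may fetch the URL under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


print(is_crawlable(ROBOTS_TXT, "https://www.example.com/recipes/pancakes"))  # True
print(is_crawlable(ROBOTS_TXT, "https://www.example.com/admin/login"))       # False
```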

Likewise, we maximized content discoverability on Google by adding recipe structured data to recipe posts, adding video structured data to videos, and submitting video sitemaps frequently. With a plethora of recipe pages across the site, the client needed to reach the broader foodie community with how-to video content.

We followed the best practices in Google's guidelines, and the implementation was straightforward. It made the site eligible to appear in organic video features. Over time, optimizing recipe schema and indexing drove significantly better exposure and discoverability in search.
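To give a feel for what recipe and video markup looks like, here is a minimal sketch that builds a schema.org `Recipe` with an embedded `VideoObject` as JSON-LD. All values are placeholders, `recipe_jsonld` is a hypothetical helper, and a production implementation would include more of the properties Google recommends (times, yield, nutrition, and so on).

```python
import json


def recipe_jsonld(name, image_url, ingredients, steps, video=None):
    """Return a <script> tag containing schema.org Recipe JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "image": [image_url],
        "recipeIngredient": list(ingredients),
        "recipeInstructions": [{"@type": "HowToStep", "text": step} for step in steps],
    }
    if video:
        data["video"] = {"@type": "VideoObject", **video}
    # Escape "</" so the payload can never close the script tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


# Placeholder recipe for illustration only.
snippet = recipe_jsonld(
    name="Fluffy Pancakes",
    image_url="https://www.example.com/img/pancakes.jpg",
    ingredients=["2 cups flour", "2 eggs", "1.5 cups milk"],
    steps=["Whisk the ingredients together.", "Cook on a hot griddle until golden."],
    video={
        "name": "How to make fluffy pancakes",
        "description": "Step-by-step pancake how-to.",
        "thumbnailUrl": "https://www.example.com/img/pancakes-video.jpg",
        "uploadDate": "2021-09-20",
        "contentUrl": "https://www.example.com/video/pancakes.mp4",
    },
)
print(snippet)
```

The generated tag goes into the page's HTML, and the result can be validated with Google's Rich Results Test before rollout.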

Results

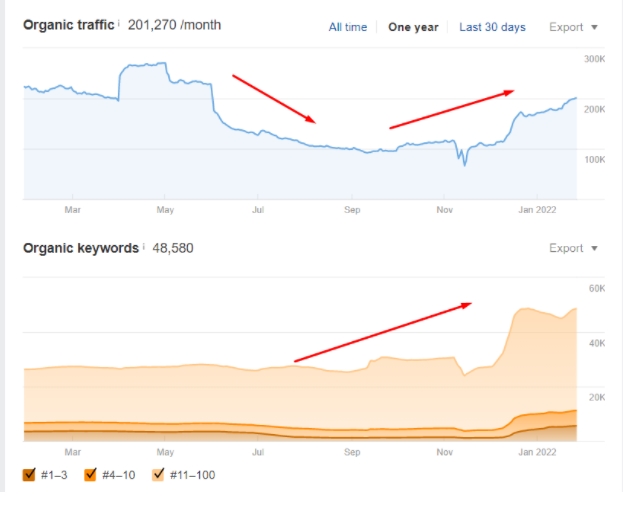

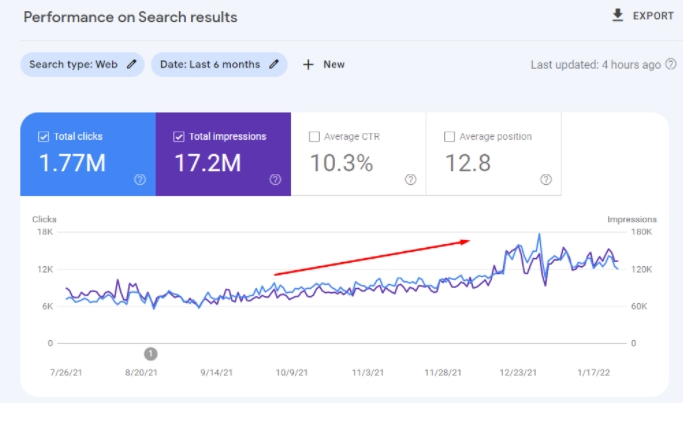

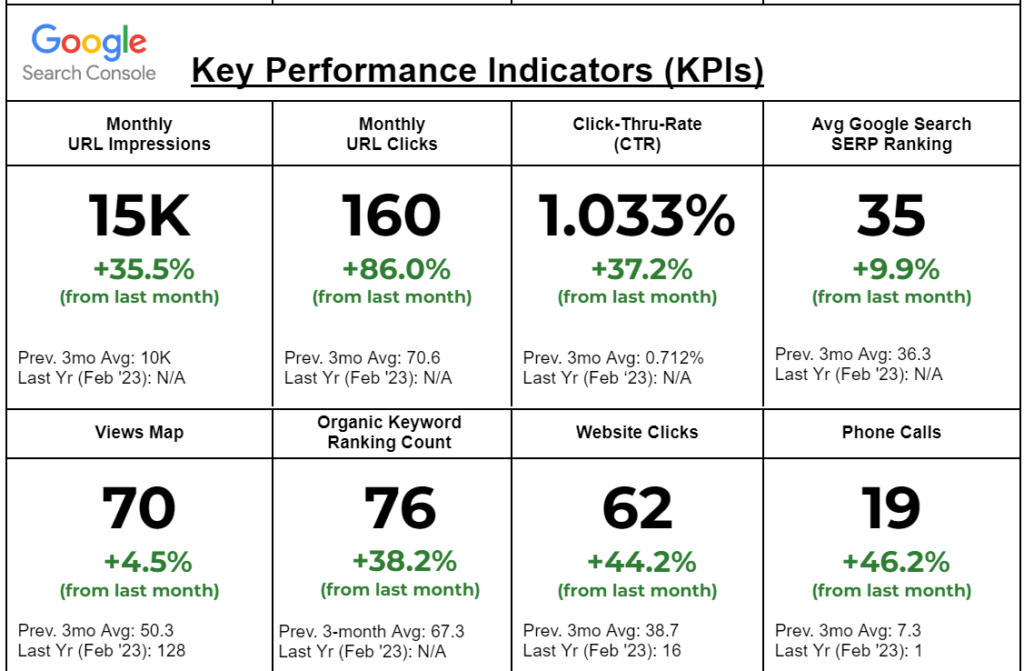

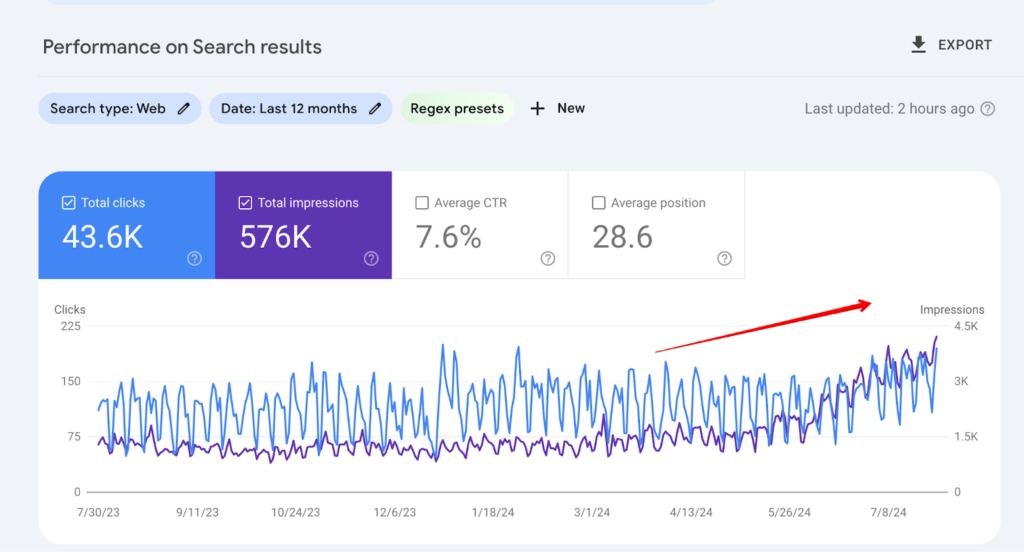

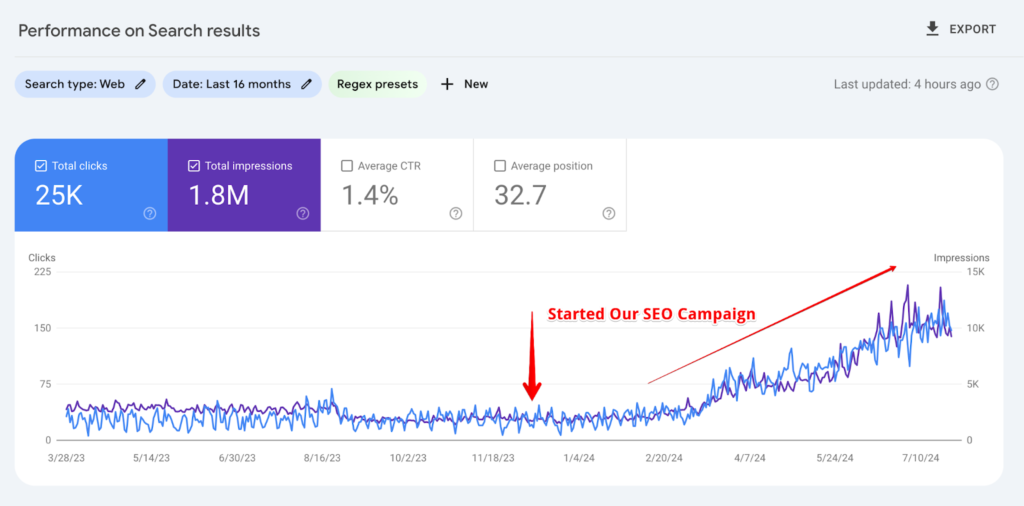

We achieved massive results in a short time. Within 4 months of working with us, the client recorded a sharp surge in organic traffic, and index coverage trends showed a large increase in indexed pages. As a result, we recovered their organic traffic, and lucrative keywords and traffic kept growing over time.

- Time Frame: Sep 2021 - Dec 2021
