1. What is an SEO audit?

In simple terms, an SEO site audit is the process of evaluating how search engine friendly a website is. It looks at how well the site performs in search engines, which factors affect its health and performance, and which areas can be improved to increase its efficiency and visibility.

A simple analogy is going to a hospital for a full-body checkup. Once the reports arrive, you are referred to a doctor, who reviews the overall results and, if there is any sign of a deficiency, recommends the best way to treat it.

2. Why perform an SEO site audit?

3. How often should you audit your site for SEO?

4. What are some of the best SEO audit tools?

While there are many tools you can use to audit a website for SEO, the best approach is either to perform the audit yourself by following a guide (like the one you are reading now) or to hire a digital marketing agency that offers an SEO site audit service. An SEO audit is quite technical, so hiring an expert or an online marketing agency is often recommended.

Here are some of the best tools that you need for an effective SEO audit process:

Free SEO Audit Tools

Top Premium SEO Audit Tools

5. Why use premium SEO tools?

6. How to start an SEO Audit?

Step 1: Make sure only one canonical version of your URL is browseable

But first, you need to check that only one canonical version of your site is browseable. Having multiple URLs for the same pages leads to duplicate content issues and negatively impacts your SEO performance.

Consider all the ways someone will type your website address into a browser. For example:

- http://yourdomain.com

- http://www.yourdomain.com

- https://yourdomain.com

- https://www.yourdomain.com

Only ONE of these should be accessible in a browser. For instance, https://yourdomain.com could be the preferred version.
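As a rough illustration of this check, here is a minimal Python sketch. It assumes you have already noted where each variant ends up after redirects (from your crawler or by trying each one in a browser); the helper names `url_variants` and `non_canonical` are made up for this example and do not come from any SEO tool.

```python
from urllib.parse import urlsplit


def url_variants(domain: str) -> list[str]:
    """Return the four common ways a visitor might type the site address."""
    bare = domain.removeprefix("www.")
    return [
        f"{scheme}://{host}"
        for scheme in ("http", "https")
        for host in (bare, f"www.{bare}")
    ]


def non_canonical(final_urls: dict[str, str], preferred: str) -> list[str]:
    """List the variants whose final URL is not on the preferred scheme and host.

    `final_urls` maps each variant to the URL it ends up on after redirects.
    """
    def normalize(url: str) -> tuple[str, str]:
        parts = urlsplit(url)
        return parts.scheme, parts.netloc.lower()

    target = normalize(preferred)
    return [v for v, final in final_urls.items() if normalize(final) != target]


# Hypothetical observations: every variant redirects correctly except one.
observed = {v: "https://yourdomain.com/" for v in url_variants("yourdomain.com")}
observed["http://www.yourdomain.com"] = "http://www.yourdomain.com/"
print(non_canonical(observed, "https://yourdomain.com"))
```

Any variant the script prints is still browseable on its own and most likely needs a 301 redirect to the preferred version.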

In addition, WordPress users will find this setting under Settings > General: set both WordPress Address (URL) and Site Address (URL) to the preferred version.

Step 2: Start a website crawl

Using SEMrush

SEMrush is one of the world’s most popular SEO tools offering 40+ modules for enhancing online visibility and marketing analytics.

- Go to the Site Audit tool.

- Go to the project section and enter your domain and project name. Leave out the protocol (http:// or https://).

- After creating the project, go to the project dashboard and click Set up.

- When the audit finishes, you will be shown the audit report.

Using Ahrefs

- Go to Site Audit (Site Audit > New Project > enter your domain in the ‘Scope & seeds’ section > untick 301 redirects).

- Hit “Next.”

For the most part, you can leave the rest of the settings as they are.

But I do recommend toggling the Check HTTP status of external links and Execute JavaScript options (under Crawl settings) to “on.”

When you’re done, hit “Create project.” Ahrefs’ Site Audit will start working away in the background while we continue with the audit.

Using Screaming Frog

To use it, first download the desktop version of Screaming Frog.

- After installing it on your desktop, open it to see the main interface.

- Enter the preferred version of your URL in the URL field and hit Start.

Alright, you have now learned how to run a full site audit using various tools.

Make sure to use only one premium SEO tool for the site audit. I have been using SEMrush for years and am pretty comfortable with it. Use whichever tool you are comfortable with.

Now take a quick look over the site audit report. This phase is about optimization and implementation: after gathering the audit reports, prioritize the quick fixes and work through them.

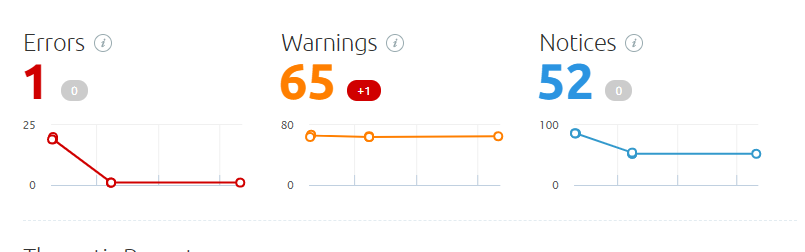

So you might be wondering about the best way to re-optimize your site for SEO. First, prioritize the work after analyzing the site audit reports. Many premium SEO tools categorize the issues in a site audit report as errors, warnings, or notices.

7. How to fix website SEO Issues?

Interpreting Issues

Errors (Max Priority)

Warnings

Notices

8. What are the key elements of a site audit?

Performing On-Page SEO Audit

Check Missing Title Tags

How to fix it?

Check Duplicate Title Tags

How to fix it?

Check Duplicate Content

First of all, Google will typically show only one duplicate page, filtering other instances out of its index and search results, and this page may not be the one you want to rank.

How to fix it?

- Add a rel="canonical" link to your duplicate pages to inform search engines which page to show in search results.

- Consolidate duplicates into the original: use a 301 redirect from each duplicate page to the original one.

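- Use rel="next" and rel="prev" link attributes to mark up paginated series. Note that Google has said it no longer uses these attributes as an indexing signal, although other search engines may still read them.

- Instruct Googlebot to handle URL parameters differently using Google Search Console. Note that Google has since retired the URL Parameters tool, so canonical links and consistent internal linking are now the main ways to handle parameterized URLs.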

- Provide some unique content on the webpage.

- You can try a content duplicate checker such as Copyscape to ensure the quality of the content.
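To make the idea of duplicate pages concrete, here is a small Python sketch that groups URLs whose extracted text is identical once case and whitespace are ignored. It is only an assumption about how you might do a first pass yourself: the function names are hypothetical, and it will not catch near-duplicates the way a dedicated checker does.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text: str) -> str:
    """Hash the text after lowercasing and collapsing whitespace."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def duplicate_groups(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose body text is identical after normalization."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical page texts, e.g. exported from a crawl.
pages = {
    "/seo-audit": "What is an SEO audit?  A full checkup of your site.",
    "/seo-audit?ref=home": "what is an seo audit? a full checkup of your site.",
    "/contact": "Get in touch with us.",
}
print(duplicate_groups(pages))
```

Each group it returns is a candidate for one of the fixes above: a canonical link, a 301 redirect, or some unique content.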

Check Broken Internal Links

How to fix it?

Check Broken Internal Images

How to fix it?

- If an image is no longer located in the same location, change its URL

- If an image was deleted or damaged, replace it with a new one

- If an image is no longer needed, simply remove it from your page’s code

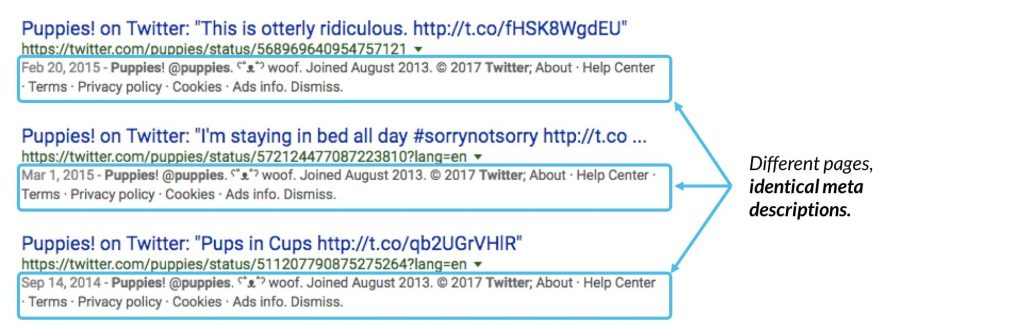

Check Duplicate Meta Description

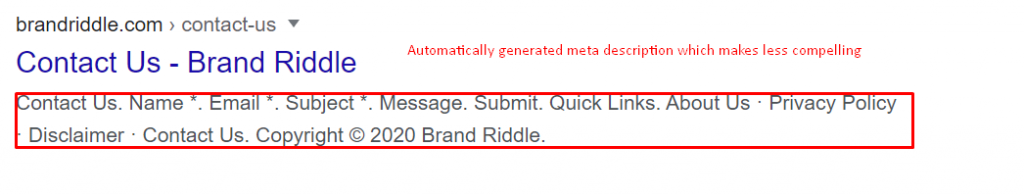

Image Source: Moz

A meta description tag (<meta name="description">) is a short summary of a webpage’s content that helps search engines understand what the page is about and can be shown to users in search results.

Duplicate meta descriptions on different pages mean a lost opportunity to use more relevant keywords.

Also, duplicate meta descriptions make it difficult for search engines and users to differentiate between different web pages. It is better to have no meta description at all than to have a duplicate one.

How to fix it?

Provide a unique, relevant meta description for each of your webpages. For information on how to create effective meta descriptions, please see this Google article.

You can also view tips on writing a unique and compelling meta description.
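If you want to spot duplicates without a premium tool, something like the following Python sketch could work. It assumes you already have the HTML of each page saved (the `pages` dictionary is made-up sample data) and uses only the standard library's `html.parser`; the class and function names are my own.

```python
from collections import defaultdict
from html.parser import HTMLParser
from typing import Optional


class MetaDescriptionParser(HTMLParser):
    """Collect the content of <meta name="description"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.descriptions: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.descriptions.append((attributes.get("content") or "").strip())


def meta_description(html: str) -> Optional[str]:
    """Return the first meta description on the page, or None if missing."""
    parser = MetaDescriptionParser()
    parser.feed(html)
    return parser.descriptions[0] if parser.descriptions else None


def duplicate_descriptions(pages: dict[str, str]) -> dict[str, list[str]]:
    """Map each description used on more than one page to those pages."""
    seen = defaultdict(list)
    for url, html in pages.items():
        description = meta_description(html)
        if description:
            seen[description].append(url)
    return {d: urls for d, urls in seen.items() if len(urls) > 1}


# Hypothetical sample pages.
pages = {
    "/a": '<head><meta name="description" content="Best SEO tips"></head>',
    "/b": '<meta name="Description" content="Best SEO tips" />',
    "/c": '<meta name="description" content="Unique text">',
    "/d": "<title>No description</title>",
}
print(duplicate_descriptions(pages))
```

Pages where `meta_description` returns `None` are the ones with a missing description, which is a separate check covered further down.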

Check Short Title Element

How to fix it?

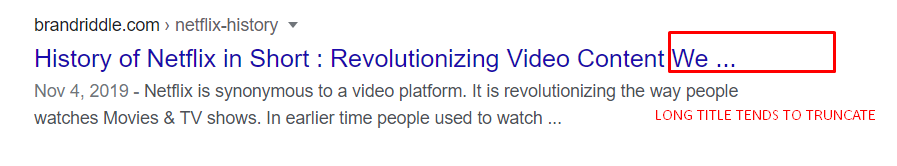

Check Long title element

How to fix it?

Check Missing H1

Check Missing Meta Description

A good description helps users know what your page is about and encourages them to click on it. If your page’s meta description tag is missing, search engines will usually display its first sentence, which may be irrelevant and unappealing to users.

How to fix it?

Check Too Many On-Page Links

How to fix it?

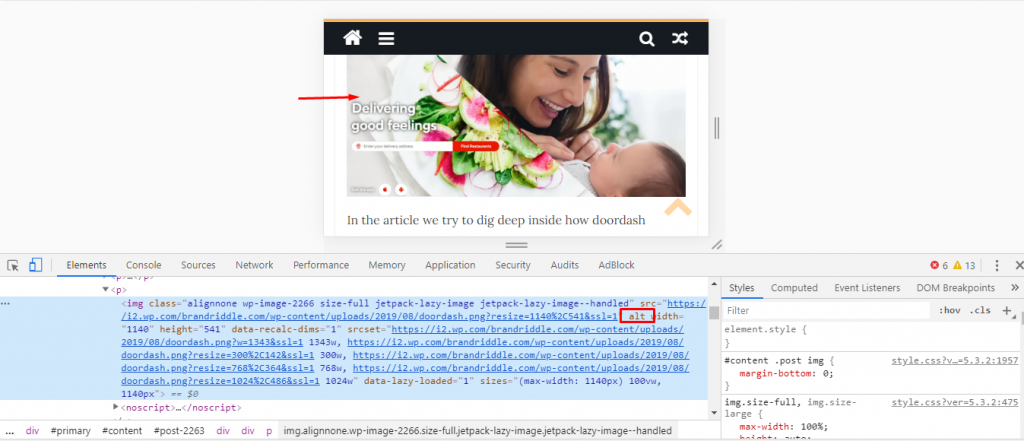

Check Missing Image ALT attributes

How to fix it?

Check Too Many URL Parameters

How to fix it?

- Try to use no more than four parameters in your URLs

- Avoid using underscores (_) in URLs

- Using hyphens (-) is a best practice
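The three rules above are easy to check in bulk. Here is a short Python sketch using the standard `urllib.parse` module; the function name `url_issues` and the sample URLs are just assumptions for the example.

```python
from urllib.parse import parse_qsl, urlsplit

MAX_PARAMETERS = 4  # limit suggested in the list above


def url_issues(url: str) -> list[str]:
    """Return a list of problems found in a single URL."""
    parts = urlsplit(url)
    issues = []
    parameter_count = len(parse_qsl(parts.query, keep_blank_values=True))
    if parameter_count > MAX_PARAMETERS:
        issues.append(f"{parameter_count} parameters (more than {MAX_PARAMETERS})")
    if "_" in parts.path:
        issues.append("underscore in path; prefer hyphens")
    return issues


print(url_issues("https://yourdomain.com/seo-audit"))
print(url_issues("https://yourdomain.com/seo_audit?a=1&b=2&c=3&d=4&e=5"))
```

An empty list means the URL passes both checks.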

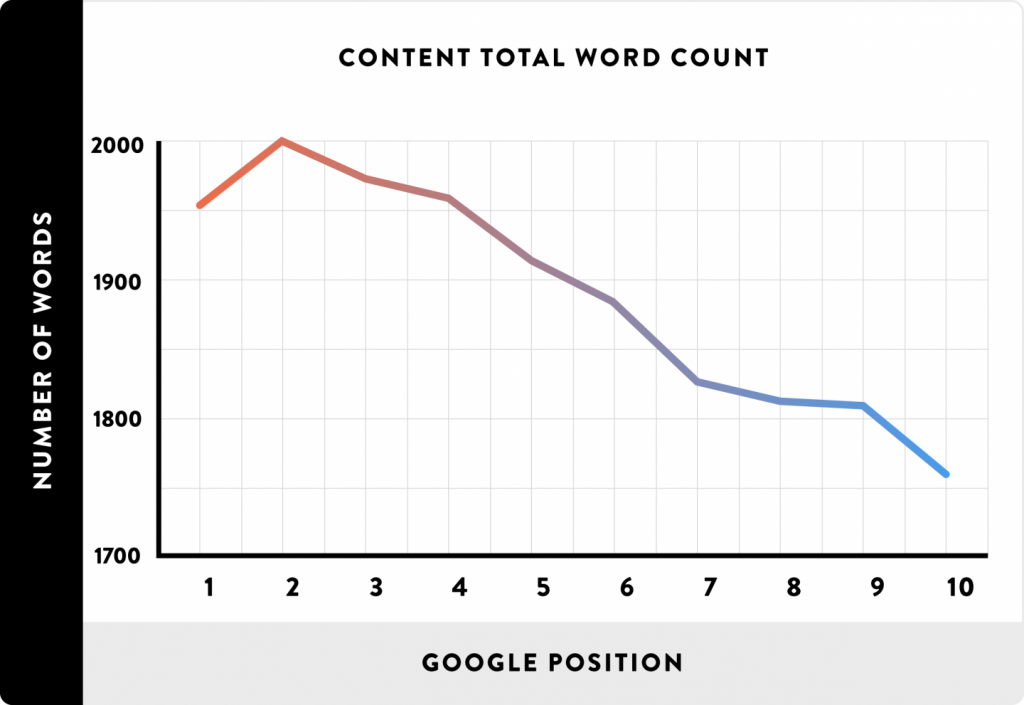

Low word count

Search engines prefer to provide as much information to users as possible, so pages with longer content tend to be placed higher in search results, as opposed to those with lower word counts.

For more information, please view this video.

How to fix it?

Check Nofollow attribute in outgoing internal links

That’s why it is not recommended that you use nofollow attributes in internal links. You should let link juice flow freely throughout your website. Moreover, unintentional use of nofollow attributes may result in your webpage being ignored by search engine crawlers even if it contains valuable content.
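To find such links on a page yourself, a sketch along these lines may help. It assumes you have the page's HTML at hand and treats a link as internal when it resolves to the same host as the page; the class and function names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class NofollowInternalLinks(HTMLParser):
    """Collect internal links that carry rel="nofollow"."""

    def __init__(self, page_url: str) -> None:
        super().__init__()
        self.page_url = page_url
        self.host = urlsplit(page_url).netloc.lower()
        self.flagged: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        rel_tokens = (attributes.get("rel") or "").lower().split()
        if not href or "nofollow" not in rel_tokens:
            return
        absolute = urljoin(self.page_url, href)
        # Internal means the link resolves to the same host as the page.
        if urlsplit(absolute).netloc.lower() == self.host:
            self.flagged.append(absolute)


def nofollow_internal_links(page_url: str, html: str) -> list[str]:
    parser = NofollowInternalLinks(page_url)
    parser.feed(html)
    return parser.flagged


# Hypothetical page markup.
html = (
    '<a href="/pricing" rel="nofollow">Pricing</a>'
    '<a href="https://example.org/" rel="nofollow">Partner</a>'
    '<a href="/blog">Blog</a>'
)
print(nofollow_internal_links("https://yourdomain.com/", html))
```

Nofollow on the external link is left alone here, since the advice above only concerns links within your own site.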

How to fix it?

Check Sitemap.xml not specified in robots.txt

How to fix it?

Check Multiple H1 Tags

How to fix it?

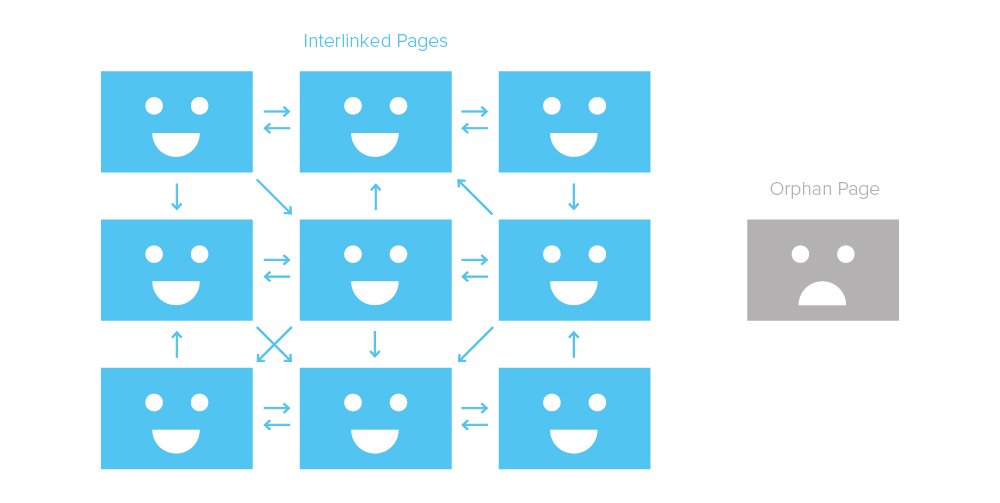

Check orphaned pages

How to fix it?

- If a page is no longer needed, remove it

- If a page has valuable content and brings traffic to your website, link to it from another page on your website

- If a page serves a specific need and requires no internal linking, leave it as is
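Before deciding which of these applies, you need the list of orphaned pages. A minimal Python sketch, assuming you have a set of known URLs (for example from sitemap.xml) and a map of internal links from your crawl, could look like this. All names and the sample data are made up for illustration.

```python
def orphaned_pages(known_urls: set[str], links: dict[str, set[str]]) -> set[str]:
    """Return known URLs that no other page links to.

    `known_urls` could come from sitemap.xml; `links` maps each crawled page
    to the internal URLs it links to. Self-links do not count.
    """
    linked = set()
    for source, targets in links.items():
        linked.update(target for target in targets if target != source)
    return known_urls - linked


# Hypothetical crawl data.
known = {"/", "/blog", "/old-offer"}
links = {
    "/": {"/blog"},
    "/blog": {"/"},
    "/old-offer": {"/old-offer"},
}
print(orphaned_pages(known, links))
```

Here `/old-offer` only links to itself, so it is reported as orphaned.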

Check page crawl depth

How to fix it?

Performing a Technical SEO Audit

Check 5XX Errors

How to fix it?

Check 4XX Errors

This will in turn lead to a drop in traffic driven to your website.

How to fix it?

If a web page returns an error, remove all links leading to the error page or replace it with another resource.

To identify all pages on your website that contain links to a 4xx page, click “View broken links” next to the error page if you are using SEMrush.

You can also find these pages in Google Search Console, under the index coverage report.

Check for Page Not Crawled

- Your site’s server response time is more than 5 seconds

- Your server refused access to your webpages

How to fix it?

Check for Invalid robots.txt file

If your robots.txt file is poorly configured, it can cause you a lot of problems.

Web pages that you want to be promoted in search results may not be indexed by search engines, while some of your private content may be exposed to users.

So, one configuration mistake can damage your search rankings, ruining all your search engine optimization efforts.

How to fix it?

For information on how to configure your robots.txt, please see this article.
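One way to sanity-check a robots.txt file before you publish it is Python's built-in `urllib.robotparser`. The sketch below parses a made-up sample file (the rules and URLs are assumptions, not a recommended configuration) and asks whether a crawler may fetch two pages. Keep in mind that Python's parser may resolve overlapping Allow and Disallow rules differently from Google, so treat it as a rough check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/

Sitemap: https://yourdomain.com/sitemap.xml
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)
# A page you want indexed should be fetchable.
print(rules.can_fetch("Googlebot", "https://yourdomain.com/blog/seo-audit"))
# A private area should be blocked.
print(rules.can_fetch("Googlebot", "https://yourdomain.com/wp-admin/options.php"))
# Sitemap lines declared in the file (None if there are none, Python 3.8+).
print(rules.site_maps())
```

The last call is also a quick way to confirm that your sitemap.xml is actually specified in robots.txt.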

Check for Invalid sitemap.xml format

How to fix it?

For information on how to configure your sitemap.xml, please see this article.

Check www resolve issues

How to fix it?

Large HTML page size

A webpage’s HTML size is the size of all HTML code contained on it. A page size that is too large (i.e., exceeding 2 MB) leads to a slower page load time, resulting in a poor user experience and a lower search engine ranking.

How to fix it?

Check Issues with Mixed Content

How to fix it?

Only embed HTTPS content on HTTPS pages. Replace all HTTP links with the new HTTPS versions. If there are any external links leading to a page that has no HTTPS version, remove those links.
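To locate the insecure resources on a page, you could scan its HTML for embedded files loaded over http://. The following Python sketch does that for a handful of common tags. The tag list is my own assumption and is not exhaustive, and ordinary links (`<a href>`) are deliberately ignored because they are not embedded content.

```python
from html.parser import HTMLParser

# Tags that embed resources, and the attribute holding the resource URL.
RESOURCE_ATTRIBUTES = {
    "img": "src", "script": "src", "iframe": "src",
    "audio": "src", "video": "src", "source": "src", "link": "href",
}


class MixedContentParser(HTMLParser):
    """Collect embedded resources that are requested over plain HTTP."""

    def __init__(self) -> None:
        super().__init__()
        self.insecure: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        attribute = RESOURCE_ATTRIBUTES.get(tag)
        if attribute is None:
            return
        attributes = dict(attrs)
        # For <link>, only stylesheets are treated as embedded resources here.
        if tag == "link" and "stylesheet" not in (attributes.get("rel") or "").lower().split():
            return
        value = (attributes.get(attribute) or "").strip()
        if value.lower().startswith("http://"):
            self.insecure.append((tag, value))


def insecure_resources(html: str) -> list[tuple[str, str]]:
    parser = MixedContentParser()
    parser.feed(html)
    return parser.insecure


# Hypothetical markup from an HTTPS page.
html = (
    '<img src="http://yourdomain.com/logo.png">'
    '<script src="https://yourdomain.com/app.js"></script>'
    '<a href="http://example.org/">link</a>'
    '<link rel="stylesheet" href="http://yourdomain.com/style.css">'
    '<link rel="canonical" href="http://yourdomain.com/">'
)
print(insecure_resources(html))
```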

Check For Neither canonical URL nor 301 redirect from HTTP homepage

If you’re running both HTTP and HTTPS versions of your homepage, it is very important to make sure that their coexistence doesn’t obstruct your SEO. Search engines are not able to figure out which page to index and which one to prioritize in search results.

As a result, you may experience a lot of problems, including pages competing with each other, traffic loss and poor placement in search results. To avoid these issues, you must instruct search engines to only index the HTTPS version.

How to fix it?

Either redirect your HTTP page to the HTTPS version via a 301 redirect, or mark up your HTTPS version as the preferred one by adding a rel="canonical" link to your HTTP pages.

Check For Redirect Chains and Loops

Long redirect chains and infinite loops lead to a number of problems that can damage your SEO efforts. They make it difficult for search engines to crawl your site, which affects your crawl budget usage and how well your web pages are indexed, slows down your site’s load speed, and, as a result, may have a negative impact on your rankings and user experience.

How to fix it?

The best way to avoid any issues is to follow one general rule: do not use more than three redirects in a chain.

If you are already experiencing issues with long redirect chains or loops, we recommend that you redirect each URL in the chain to your final destination page.

We do not recommend that you simply remove redirects for intermediate pages as there can be other links pointing to your removed URLs, and, as a result, you may end up with 404 errors.
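The advice above can be sketched in a few lines of Python. This example assumes your redirects are available as a simple source-to-target mapping (for instance exported from your crawler); the function names and the sample data are hypothetical.

```python
MAX_REDIRECTS = 3  # rule of thumb from the text above


def trace_redirects(start: str, redirects: dict[str, str]) -> tuple[list[str], bool]:
    """Follow `redirects` from `start`; return the chain and whether it loops."""
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return chain, True
        seen.add(current)
    return chain, False


def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Point every source URL straight at its final destination, skipping loops."""
    flattened = {}
    for source in redirects:
        chain, loops = trace_redirects(source, redirects)
        if not loops:
            flattened[source] = chain[-1]
    return flattened


# Hypothetical redirect map: one long chain and one loop.
redirects = {"/a": "/b", "/b": "/c", "/c": "/d", "/x": "/y", "/y": "/x"}
chain, loops = trace_redirects("/a", redirects)
print(chain, "hops:", len(chain) - 1, "too long:", len(chain) - 1 > MAX_REDIRECTS)
print(trace_redirects("/x", redirects))
print(flatten(redirects))
```

`flatten` keeps every intermediate URL as a redirect source, which matches the recommendation not to remove intermediate redirects. URLs caught in a loop are left out so you can fix them by hand.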

Check Broken Canonical URLs

By setting a rel="canonical" element on your page, you can inform search engines of which version of a page you want to show up in search results. When using canonical tags, it is important to make sure that the URL you include in your rel="canonical" element leads to a page that actually exists.

Canonical links that lead to non-existent webpages complicate the process of crawling and indexing your content and, as a result, decrease crawling efficiency and lead to unnecessary crawl budget waste.
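Here is a rough Python sketch of how such a check might work. It assumes you already know the HTTP status of your URLs from a crawl (the `status_by_url` dictionary is made-up data) and it also flags pages that declare more than one canonical URL. The names are my own and not taken from any tool.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collect the href of every <link rel="canonical"> on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_tokens = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_tokens and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def canonical_issues(page_url: str, html: str, status_by_url: dict[str, int]) -> list[str]:
    """Report conflicting canonicals and canonical targets that return an error."""
    parser = CanonicalParser()
    parser.feed(html)
    targets = [urljoin(page_url, href) for href in parser.canonicals]
    issues = []
    if len(set(targets)) > 1:
        issues.append("multiple canonical URLs")
    for target in targets:
        status = status_by_url.get(target)
        if status is not None and status >= 400:
            issues.append(f"canonical target returns {status}: {target}")
    return issues


# Hypothetical page and crawl results.
html = '<head><link rel="canonical" href="https://yourdomain.com/guide"></head>'
statuses = {"https://yourdomain.com/guide": 404}
print(canonical_issues("https://yourdomain.com/guide?ref=x", html, statuses))
```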

How to fix it?

Check Multiple Canonical URLs

How to fix it?

Check For Too large sitemap.xml

How to fix it?

Break up your sitemap into smaller files. You will also need to create a sitemap index file to list all your sitemaps and submit it to Google.

Don’t forget to specify the location of your new sitemap.xml files in your robots.txt.

For more details, see this Google article.
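As a sketch of what splitting a sitemap involves, the Python below chunks a list of URLs and builds both the individual sitemaps and the index file with the standard `xml.etree.ElementTree` module. The 50,000 URL limit comes from the sitemaps.org protocol; the file names and helper functions are assumptions for this example.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file limit in the sitemaps.org protocol


def chunk_urls(urls: list[str], size: int = MAX_URLS_PER_SITEMAP) -> list[list[str]]:
    """Split the URL list into pieces of at most `size` entries."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap(urls: list[str]) -> str:
    """Build one sitemap file listing the given page URLs."""
    root = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(root, "url"), "loc").text = url
    return ET.tostring(root, encoding="unicode")


def build_sitemap_index(sitemap_urls: list[str]) -> str:
    """Build the index file that lists every individual sitemap."""
    root = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        ET.SubElement(ET.SubElement(root, "sitemap"), "loc").text = url
    return ET.tostring(root, encoding="unicode")


# Hypothetical example with a tiny chunk size so the result is easy to read.
urls = [f"https://yourdomain.com/page-{n}" for n in range(1, 6)]
chunks = chunk_urls(urls, size=2)
sitemaps = [build_sitemap(chunk) for chunk in chunks]
index = build_sitemap_index(
    [f"https://yourdomain.com/sitemap-{n}.xml" for n in range(1, len(chunks) + 1)]
)
print(index)
```

When saving the output, write each file as UTF-8 and add an XML declaration at the top.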

Check Slow page (HTML) load speed

Page (HTML) load speed is one of the most important ranking factors. The quicker your page loads, the higher the rankings it can receive. Moreover, fast-loading pages positively affect user experience and may increase your conversion rates.

Please note that “page load speed” usually refers to the amount of time it takes for a webpage to be fully rendered by a browser. However, the crawler only measures the time it takes to load a webpage’s HTML code; load times for images, JavaScript and CSS are not factored in.

How to fix it?

The main factors that negatively affect your HTML page generation time are your server’s performance and the density of your webpage’s HTML code.

So, try to clean up your webpage’s HTML code. If the problem is with your web server, you should think about moving to a better hosting service with more resources.

Broken External Links

How to fix it?

Performing a Content Audit

9. How to perform a solid content audit?

Tools required for content audit

There are a lot of content audit tools, but it makes more sense to analyze content manually. Premium tools like SEMrush, Ahrefs, and Screaming Frog are great, but in this section I recommend using Google's tools:

- Google Search Console

- Google Analytics

Great Content

How to optimize more?

- If possible, make it more compelling by adding elements such as infographics, statistics, or a complete guide

- If it is topically relevant, internally link from those posts to top-performing posts

- Use varied, keyword-rich anchor text (based on the intended keyword) to enhance ranking

Salvageable (Editable)

How to optimize more?

- If possible, update or rework the content for further optimization

- If it is topically relevant, internally link to it from top-performing posts

- When linking internally, use varied, keyword-rich anchor text (based on the intended keyword) to enhance ranking

Unsalvageable (Non-editable)

How to optimize more?

- If a page has thin content, either rewrite it or delete it. Set up a 301 redirect from each deleted page to a better piece of content.

- If a site has a number of thin pages covering similar topics, content consolidation works best. After consolidating, set up 301 redirects to the better content. This helps avoid keyword cannibalization and makes better use of your crawl budget.

- It is very important to check your website for underperforming pages. If a page has valuable content but is not linked to by another page on your website, it can miss out on the opportunity to receive enough link juice and ranking. Make sure to internally link to it from top-performing or topically relevant pages.

Performing Backlink Audit

Backlinks (also known as “inbound links”, “incoming links” or “one way links”) are links from one website to a page on another website.

Backlinks are basically votes from other websites. Each of these votes tells search engines: “This content is valuable, credible and useful”. That’s why backlinks are regarded as one of Google's top ranking factors.

10. Why do a backlink audit?

- To remove toxic backlinks from your site to improve the site’s SEO health.

- To find new backlink opportunities

- To fix broken backlinks

- Open Google Search Console and select your site property

- Go to the Links tab

- Analyze all backlinks using either Moz Link Explorer or SEMrush

Analyze your links:

11. What to do if you are getting a link from a spammy website?

12. How to use Google's disavow tool?

13. Final Thought

If you are looking for a complete audit service, Orka Socials is here to help. If you have any questions about the audit process, please leave a comment below.